Setting up PETSc in OpenFOAM

Guide for coupling PETSc into OpenFOAM.

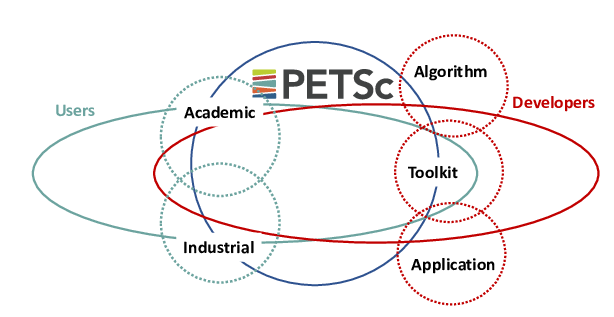

PETSc, the Portable, Extensible Toolkit for Scientific Computation, is a library for the scalable (parallel) solution of scientific applications governed by partial differential equations (PDEs). It is often described as the world’s most widely used parallel numerical software library for PDEs and sparse matrix computations, and it targets the linear and nonlinear systems of equations that arise from discretizing PDEs. PETSc is organized around the mathematical concepts involved in solving such systems. It is used across many domains, and OpenFOAM is no exception: a dedicated library named petsc4Foam provides a solver that integrates PETSc and its external dependencies, such as HYPRE, into OpenFOAM simulations. Through petsc4Foam, the solvers and preconditioners available in PETSc and in its external libraries become usable from OpenFOAM.

Compilation steps for PETSc

Let’s walk through the steps to build or compile PETSc in OpenFOAM, a process applicable to both Ubuntu and Windows Subsystem for Linux (WSL):

- Start by sourcing your OpenFOAM environment. For instance:

      source ~/OpenFOAM/OpenFOAM-v2312/etc/bashrc

- Navigate to the ThirdParty folder:

      foam
      cd $WM_THIRD_PARTY_DIR

- Check if PETSc dependencies are present:

      ./makePETSC

  This should prompt a warning indicating missing sources for PETSc. Note the version, e.g., petsc-x.xx.x.

      Missing sources: 'petsc-x.xx.x'
      Possible download locations for PETSC :
          https://www.mcs.anl.gov/petsc/
          https://ftp.mcs.anl.gov/pub/petsc/release-snapshots/petsc-lite-x.xx.x.tar.gz

  Here, petsc-x.xx.x is the PETSc version, for example petsc-3.19.2. In my case, the version is petsc-3.19.2.

- Download the PETSc source files using the link from the warning in the previous step, swapping in the PETSc version you require:

      wget https://ftp.mcs.anl.gov/pub/petsc/release-snapshots/petsc-lite-3.19.2.tar.gz

  This will download a file named petsc-lite-3.19.2.tar.gz. Extract the archive with:

      tar -xzvf petsc-lite-3.19.2.tar.gz

  This step should generate a folder named petsc-3.19.2.

- Install essential packages, BLAS and LAPACK:

      sudo apt-get install libblas-dev liblapack-dev

- Build PETSc:

      ./makePETSC

  This step will take about 5 minutes, depending on your system configuration, and produces output like the following:

      + ./configure --prefix=/home/ubuntu-students/OpenFOAM/ThirdParty-v2312/platforms/linux64GccDPInt32/petsc-3.19.2 --PETSC_DIR=/home/ubuntu-students/OpenFOAM/ThirdParty-v2312/petsc-3.19.2 --with-petsc-arch=DPInt32 --with-clanguage=C --with-fc=0 --with-x=0 --with-cc=/usr/bin/mpicc --with-cxx=/usr/bin/mpicxx --with-debugging=0 --COPTFLAGS=-O3 --CXXOPTFLAGS=-O3 --with-shared-libraries --with-64-bit-indices=0 --with-precision=double --download-hypre
      Using python3 for Python
      ===============================================================================
      Configuring PETSc to compile on your system
      ===============================================================================
      ===============================================================================
      Trying to download git://https://github.com/hypre-space/hypre for HYPRE
      ===============================================================================
      ===============================================================================
      Running configure on HYPRE; this may take several minutes
      ===============================================================================
      ===============================================================================
      Running make on HYPRE; this may take several minutes
      ===============================================================================

- If not loaded automatically, set up the PETSc environment:

      export PETSC_DIR=~/OpenFOAM/ThirdParty-v2312/petsc-3.19.2/
      export PETSC_ARCH=DPInt32
      export LD_LIBRARY_PATH=$PETSC_DIR/$PETSC_ARCH/lib:$LD_LIBRARY_PATH

- Navigate to the OpenFOAM working directory and build petsc4Foam:

      foam                          # navigate to working directory
      cd modules/external-solver    # navigate to the external-solver directory
      ./Allwmake -prefix=openfoam   # install everything in FOAM_LIBBIN

- Verify that the PETSc libraries can be found and loaded:

      foamHasLibrary -verbose petscFoam

  The output of this command should be:

      Can load "petscFoam".

- Before running any simulation that uses PETSc, set up the PETSc environment. You can do this with the export commands from the environment setup step above, or with the following command:

      eval $(foamEtcFile -sh -config petsc -- -force)
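The version lookup and download steps above can be scripted. What follows is a minimal sketch, assuming the warning printed by `./makePETSC` has the format shown above. The function names `petsc_version_from_warning` and `petsc_tarball_url` are hypothetical helpers introduced here for illustration; they are not part of OpenFOAM or PETSc.

```shell
# Hypothetical helpers (not part of OpenFOAM or PETSc).

# Read text on stdin and print "x.xx.x" from a line such as:
#   Missing sources: 'petsc-3.19.2'
petsc_version_from_warning() {
    sed -n "s/.*Missing sources: 'petsc-\([0-9][0-9.]*\)'.*/\1/p" | head -n 1
}

# Build the tarball URL, following the pattern printed in the warning.
petsc_tarball_url() {
    echo "https://ftp.mcs.anl.gov/pub/petsc/release-snapshots/petsc-lite-$1.tar.gz"
}

# Example with a captured warning line (format assumed from the step above).
warning="Missing sources: 'petsc-3.19.2'"
version=$(printf '%s\n' "$warning" | petsc_version_from_warning)
echo "version: $version"
echo "url:     $(petsc_tarball_url "$version")"
```

In a real session you could pipe the script output into the first helper, for example `./makePETSC 2>&1 | petsc_version_from_warning`. The `2>&1` is there because it is an assumption whether the warning goes to standard output or standard error.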

First Steps

Once PETSc is integrated into OpenFOAM, you can validate the installation by running a tutorial case from the external-solver directory. Here are the steps to execute the case:

# Navigate to the tutorial directory

cd ~/OpenFOAM/OpenFOAM-v2312/modules/external-solver/tutorials/incompressible/simpleFoam/pitzDaily

# Run the case using the provided run script

./Allrun

The output of this process should resemble the following:

Copying incompressible/simpleFoam/pitzDaily -> run/

Add libs (petscFoam) to controlDict

Rename fvSolution and relink to fvSolution-petsc

Running blockMesh on /home/goswami/OpenFOAM/OpenFOAM-v2312/modules/external-solver/tutorials/incompressible/simpleFoam/pitzDaily/run

Running simpleFoam on /home/goswami/OpenFOAM/OpenFOAM-v2312/modules/external-solver/tutorials/incompressible/simpleFoam/pitzDaily/run

To confirm that the tutorial ran the pitzDaily case with PETSc solvers, perform the following check:

cd run/

tail -n 20 log.simpleFoam

The output should display:

Time = 281

PETSc-bicg: Solving for Ux, Initial residual = 0.000144417, Final residual = 7.09155e-06, No Iterations 2

PETSc-bicg: Solving for Uy, Initial residual = 0.00099692, Final residual = 6.16609e-05, No Iterations 2

PETSc-cg: Solving for p, Initial residual = 0.000780479, Final residual = 7.3097e-05, No Iterations 68

time step continuity errors : sum local = 0.0034787, global = -9.27705e-05, cumulative = 0.676227

PETSc-bicg: Solving for epsilon, Initial residual = 0.000114612, Final residual = 4.76639e-06, No Iterations 2

PETSc-bicg: Solving for k, Initial residual = 0.000203968, Final residual = 1.19536e-05, No Iterations 2

ExecutionTime = 9.61 s ClockTime = 10 s

SIMPLE solution converged in 281 iterations

streamLine streamlines write:

seeded 10 particles

Tracks:10

Total samples:10885

End

Finalizing PETSc

Here, you can observe that velocity, k, and epsilon are solved using the PETSc-bicg solver, employing the Bi-conjugate Gradient method. The pressure is solved using PETSc-cg, utilizing the Preconditioned Conjugate Gradient (PCG) iterative method.
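Instead of reading the tail of the log by eye, you can extract which PETSc solver handled each field. This is a minimal sketch, assuming log lines of the form `PETSc-<type>: Solving for <field>, ...` as in the output above. The function name `petsc_solver_per_field` is a hypothetical helper, and the sample log is a shortened copy of the lines shown earlier.

```shell
# Hypothetical helper: print "<field> <solver>" pairs found in a solver log.
# Assumes lines of the form "PETSc-bicg: Solving for Ux, ..." as shown above.
petsc_solver_per_field() {
    sed -n 's/^ *\(PETSc-[A-Za-z0-9]*\): Solving for \([^,]*\),.*/\2 \1/p' "$1" | sort -u
}

# Shortened sample log, written to a temporary file for demonstration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
Time = 281
PETSc-bicg: Solving for Ux, Initial residual = 0.000144417, Final residual = 7.09155e-06, No Iterations 2
PETSc-cg: Solving for p, Initial residual = 0.000780479, Final residual = 7.3097e-05, No Iterations 68
PETSc-bicg: Solving for k, Initial residual = 0.000203968, Final residual = 1.19536e-05, No Iterations 2
ExecutionTime = 9.61 s ClockTime = 10 s
EOF

petsc_solver_per_field "$sample_log"
```

On a real case you would call `petsc_solver_per_field log.simpleFoam` from the run directory. If no lines are printed, the case most likely ran with the native OpenFOAM solvers.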

How to use PETSc in your simulations?

To use PETSc solvers in your simulations, make two essential modifications:

- Add the PETSc library to controlDict. Open your controlDict file and insert the following lines above the ‘application’ keyword:

      libs
      (
          "petscFoam"
      );

- Edit your fvSolution file and set the solver and preconditioner names in any solver to petsc. For instance, the solvers in the fvSolution file for the tutorial case executed in the last step should be configured as follows:

      p
      {
          solver          petsc;

          petsc
          {
              options
              {
                  ksp_type  cg;
                  ksp_cg_single_reduction  true;
                  ksp_norm_type none;

                  // With or without hypre
                  #if 0
                  pc_type  hypre;
                  pc_hypre_type boomeramg;
                  pc_hypre_boomeramg_max_iter "1";
                  pc_hypre_boomeramg_strong_threshold "0.25";
                  pc_hypre_boomeramg_grid_sweeps_up "1";
                  pc_hypre_boomeramg_grid_sweeps_down "1";
                  pc_hypre_boomeramg_agg_nl "1";
                  pc_hypre_boomeramg_agg_num_paths "1";
                  pc_hypre_boomeramg_max_levels "25";
                  pc_hypre_boomeramg_coarsen_type HMIS;
                  pc_hypre_boomeramg_interp_type ext+i;
                  pc_hypre_boomeramg_P_max "1";
                  pc_hypre_boomeramg_truncfactor "0.3";
                  #else
                  pc_type bjacobi;
                  sub_pc_type ilu;
                  #endif
              }

              caching
              {
                  matrix
                  {
                      update always;
                  }

                  preconditioner
                  {
                      update always;
                  }
              }
          }

          tolerance       1e-06;
          relTol          0.1;
      }

      "(U|k|epsilon|omega|f|v2)"
      {
          solver          petsc;

          petsc
          {
              options
              {
                  ksp_type bicg;
                  pc_type bjacobi;
                  sub_pc_type ilu;
              }

              caching
              {
                  matrix
                  {
                      update always;
                  }

                  preconditioner
                  {
                      update always;
                  }
              }
          }

          tolerance       1e-05;
          relTol          0.1;
      }
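A quick way to confirm that both modifications are in place is a textual check on the two files. The following is a rough sketch, assuming the standard case layout with `system/controlDict` and `system/fvSolution`. The function name `case_uses_petsc` is a hypothetical helper; it only greps for the relevant strings and does not parse OpenFOAM dictionaries, so a commented-out entry would still match.

```shell
# Hypothetical helper: rough textual check that a case is set up for PETSc.
# It greps the files; it does not parse OpenFOAM dictionaries.
case_uses_petsc() {
    case_dir=$1
    grep -q 'petscFoam' "$case_dir/system/controlDict" &&
    grep -Eq 'solver[[:space:]]+petsc;' "$case_dir/system/fvSolution"
}

# Demonstration on a throwaway case directory with minimal file contents.
demo=$(mktemp -d)
mkdir -p "$demo/system"
printf 'libs ( "petscFoam" );\napplication simpleFoam;\n' > "$demo/system/controlDict"
printf 'solvers { p { solver petsc; } }\n' > "$demo/system/fvSolution"

if case_uses_petsc "$demo"; then
    echo "PETSc configured"
else
    echo "PETSc not configured"
fi
```

For your own case, pass the case directory instead, for example `case_uses_petsc .` from inside the case.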

Why use PETSc in your simulations?

Ideally, I run OpenFOAM simulations on a High-Performance Computing (HPC) cluster or, in simpler terms, a supercomputer, and I run them in parallel on multiple cores. In OpenFOAM, parallel computation involves decomposing the mesh and fields, running the application in parallel, and post-processing the decomposed case. Parallel execution uses the Open MPI implementation of the Message Passing Interface (MPI). While OpenFOAM’s parallel performance is already good, there is room for improvement, and this is where PETSc steps in. PETSc is designed for scalability and is reported to scale up to the order of millions of cores. In addition, PETSc solvers can outperform the standard OpenFOAM solvers when a simulation runs on a large number of cores.
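The three stages of a parallel run (decomposition, parallel execution, reconstruction) map onto a short command sequence. This is only a sketch: the function `parallel_run_commands` is a hypothetical helper that prints the commands rather than running them, and it assumes that `system/decomposeParDict` exists and that its `numberOfSubdomains` matches the core count you pass in.

```shell
# Hypothetical helper: print (not run) the usual command sequence for a parallel run.
# Assumes system/decomposeParDict exists and numberOfSubdomains equals the second argument.
parallel_run_commands() {
    solver=$1
    nprocs=$2
    echo "decomposePar"                          # split mesh and fields
    echo "mpirun -np $nprocs $solver -parallel"  # run the solver on all cores
    echo "reconstructPar"                        # merge results for post-processing
}

parallel_run_commands simpleFoam 4
```

PETSc does not change this workflow. With the library loaded through controlDict and the solvers set in fvSolution, the same commands run the case with PETSc handling the linear systems.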

For in-depth insights into PETSc, including performance, scalability, and metrics, refer to this informative article.
